The New AI Arms Race Runs on Two Fuels: Electricity and Architecture

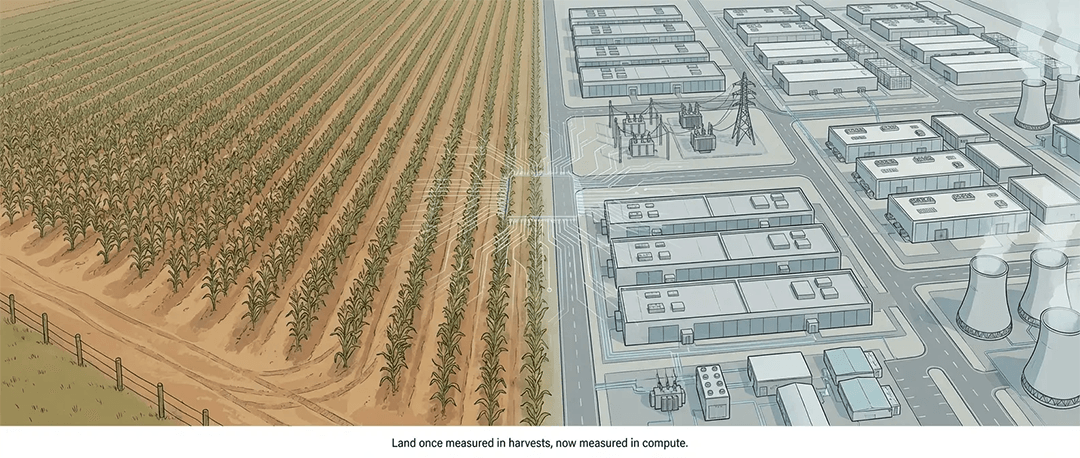

Cornfields to Compute Fields: Power, Silicon, and the New Industrial Geography of AI

Walk through almost any big AI story and you will still hear the old language. Models. Parameters. Benchmarks. “Who built the smartest brain.”

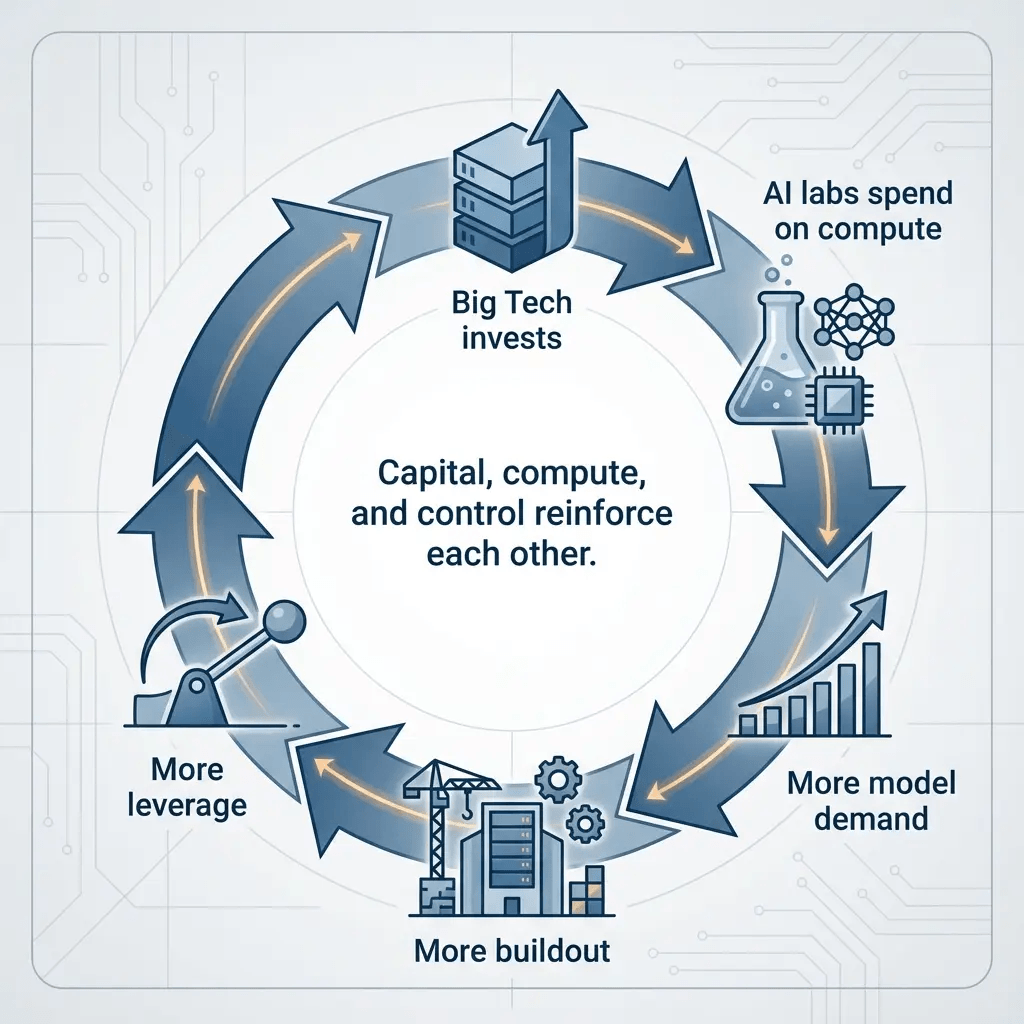

Yet the real contest has shifted under our feet. The winners will not simply be the teams that write the cleverest code. They will be the teams that can do two hard things at once:

1. secure absurd amounts of electricity, reliably, at scale, and

2. design an end-to-end machine that turns each watt into useful work more efficiently than the competition.

That combination now defines the AI era’s industrial geography. Cornfields turn into compute fields because power lines, land, cooling, and logistics start to matter as much as math.

1) Electricity becomes the new scarce resource

AI used to scale like a software business. Add servers, add users, grow quietly in the background.

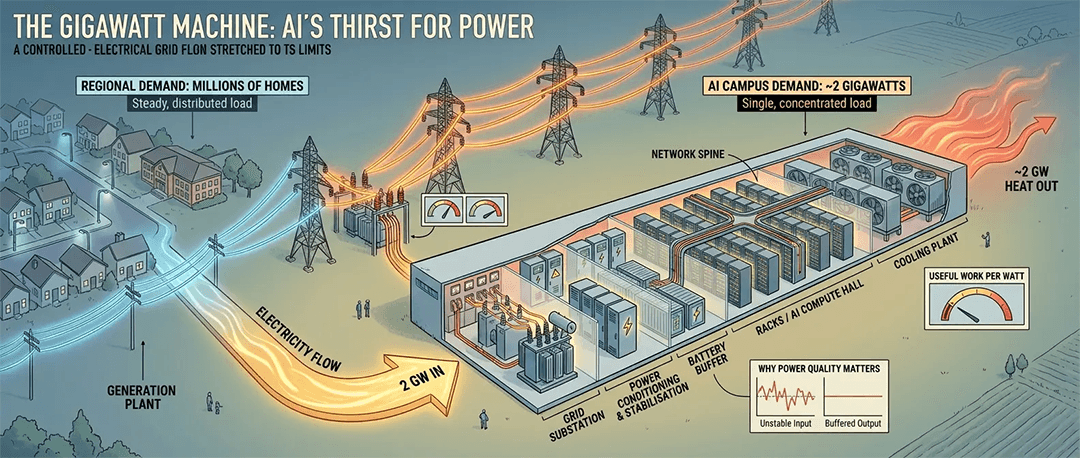

That story no longer fits. The largest AI training and inference campuses can push into gigawatt territory, and that forces a new kind of competition. Few operators can pull that much power from a grid without years of planning, expensive upgrades, and political negotiation.

At this scale, electricity stops behaving like a utility bill. Electricity starts behaving like territory.

Land matters because you need space, setbacks, substations, redundancy, and expansion room.

Transmission matters because power must arrive where the land sits.

Timing matters because interconnection queues can move slower than model cycles.

Stability matters because the grid does not love sudden swings in demand.

So hyperscalers now behave less like “cloud providers” and more like energy developers. They push into long-term power arrangements, on-site buffering, and proximity strategies that place campuses near large, steady generation. In practical terms, they compete for the right to exist at the next scale.

2) The first battle does not happen inside the model

People still talk about “better models,” and that still matters.

But when you try to run AI at national-infrastructure scale, the first battle rarely happens inside the neural net. It happens in the system that feeds the neural net.

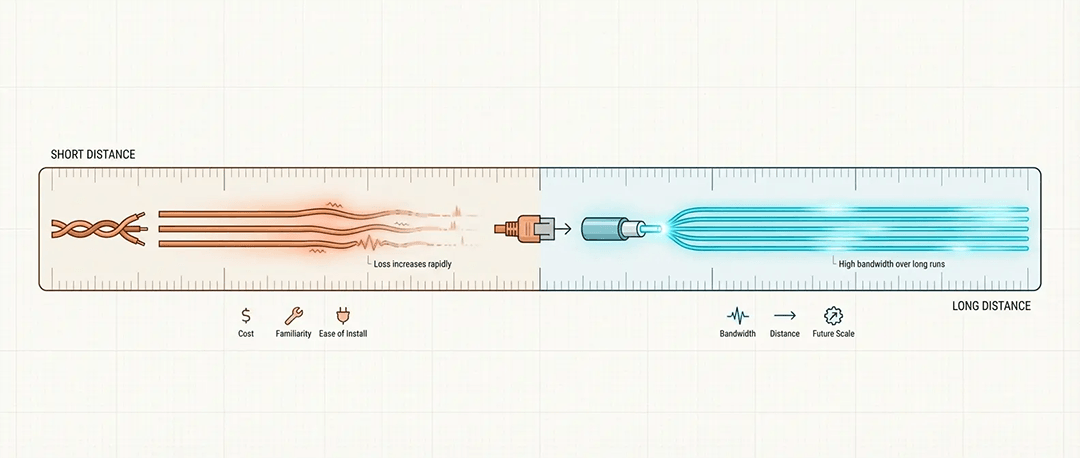

Electricity hits servers and turns into heat. Heat demands cooling. Cooling demands water, airflow, or both. Networking must move data with low latency and high bandwidth, without turning the building into a toaster. Reliability must keep long training runs from collapsing mid-flight.

If you do not win those battles, you cannot even reach the starting line of the model race.

So the competition tightens around a brutal question:

How much useful AI can you produce per megawatt, per dollar, per month of lead time?
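The question above can be made concrete with a rough comparison of sites. Below is a minimal sketch built on a few assumptions. It uses a fixed planning horizon and assumes each site produces nothing until its lead time has passed. After that, each site delivers a constant amount of useful output per megawatt-month. The `Site` fields, the function names, and every number are hypothetical and chosen for illustration only. They are not real project data.

```python
from dataclasses import dataclass


@dataclass
class Site:
    """A hypothetical AI campus option; every field is an illustrative assumption."""
    name: str
    power_mw: float             # usable power once the site is energized
    output_per_mw_month: float  # useful work per MW per month (arbitrary units)
    capex_usd: float            # total up-front cost
    lead_time_months: int       # months before the site produces anything


def output_over_horizon(site: Site, horizon_months: int) -> float:
    """Total useful output delivered within the horizon; zero before the site comes online."""
    productive_months = max(0, horizon_months - site.lead_time_months)
    return site.power_mw * site.output_per_mw_month * productive_months


def output_per_dollar(site: Site, horizon_months: int) -> float:
    """Useful output delivered within the horizon per dollar of capex."""
    return output_over_horizon(site, horizon_months) / site.capex_usd


# Hypothetical options: a smaller site that energizes early vs. a larger, slower one.
fast = Site("fast, smaller site", power_mw=200, output_per_mw_month=1.0,
            capex_usd=2e9, lead_time_months=12)
big = Site("slow, larger site", power_mw=500, output_per_mw_month=1.0,
           capex_usd=5e9, lead_time_months=36)

for s in (fast, big):
    print(s.name, output_over_horizon(s, 48), output_per_dollar(s, 48))
```

The horizon is 48 months. The smaller site comes online two years earlier and delivers 7,200 units. The larger site has 2.5 times the power but delivers only 6,000. This is why lead time sits in the question alongside megawatts and dollars.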

3) The second fuel: architecture becomes the real differentiator

For years, the default recipe looked simple.

Buy GPUs. Pack racks. Scale out.

That pattern still works, but it now shows strain. Costs rise, supply bottlenecks appear in unexpected places, and one vendor ecosystem can start to feel like a strategic dependency rather than a convenience.

That pressure pushes the largest players toward system architecture as a competitive weapon.

Not “a chip,” but a whole machine.

custom accelerators tuned for training and inference economics

packaging choices that reduce supply fragility

network fabrics that trade elegance for speed of deployment, or vice versa

cooling strategies that trade water usage for energy draw, or the reverse

software stacks that keep the hardware productive rather than idle

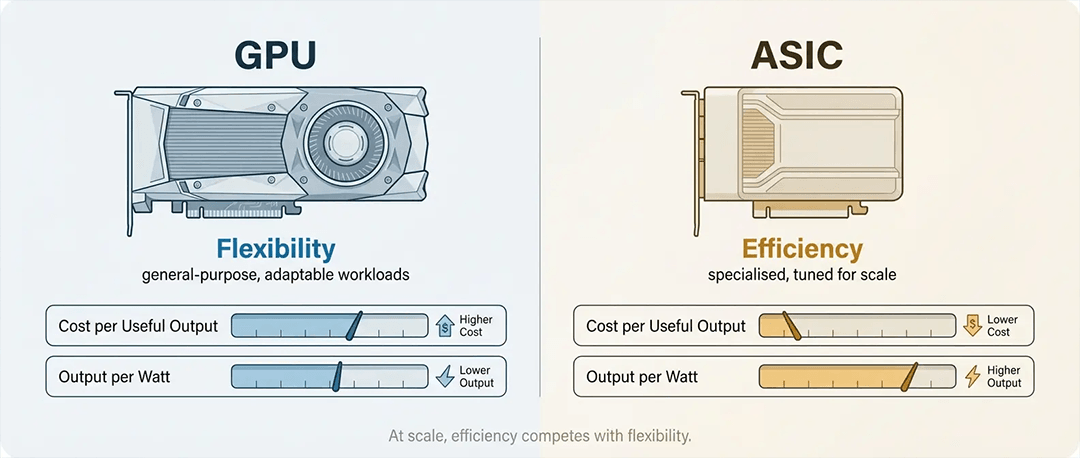

This shift explains why we now see two tracks running in parallel:

GPUs for flexibility, fast capacity, broad compatibility

custom accelerators for efficiency, cost control, and tighter integration

No one wants to bet on a single future. Everyone wants optionality. Everyone wants leverage.

4) Packaging, not wafers, can decide your schedule

One of the least visible constraints in AI sits inside manufacturing, not inside research labs.

Modern accelerators rely on advanced packaging and high-bandwidth memory integration. When packaging capacity tightens, clusters do not arrive on time. Even if you can buy the silicon, you might not be able to assemble it fast enough in the form your system requires.
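The bottleneck logic fits in a few lines. For illustration only, this sketch assumes a finished accelerator needs three inputs: one compute die, one advanced-packaging slot, and a fixed number of HBM stacks. The function name and the monthly supply figures are hypothetical, and real bills of materials vary by product.

```python
def packaging_bottleneck(dies: int, packaging_slots: int,
                         hbm_stacks: int, stacks_per_accelerator: int) -> tuple[int, str]:
    """Return (finished units per month, name of the binding constraint)."""
    # Each input caps output independently; HBM is counted in whole sets per accelerator.
    limits = {
        "compute dies": dies,
        "packaging slots": packaging_slots,
        "HBM sets": hbm_stacks // stacks_per_accelerator,
    }
    binding = min(limits, key=limits.get)
    return limits[binding], binding


# Hypothetical month: plenty of silicon, but packaging capacity is tight.
units, constraint = packaging_bottleneck(dies=50_000, packaging_slots=30_000,
                                         hbm_stacks=320_000, stacks_per_accelerator=8)
print(f"{units} accelerators this month, limited by {constraint}")
```

In this example, only 30,000 of the 50,000 available dies can be packaged, so 20,000 sit idle. Buying more silicon changes nothing until packaging capacity grows.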

So the competitive question shifts again:

Can your supply chain actually deliver your architecture at the speed your market expects?

Architectures that align to manufacturable realities can win, even when they do not top every benchmark chart.

5) Power and architecture collide in a single metric

People like to argue performance. They like to argue “my chip beats your chip.”

At scale, the argument collapses into a single number:

useful output per watt

This forces a change in how leaders think. If your system can produce more tokens, more training steps, or more completed inference jobs per megawatt, then you effectively create capacity without building a new power plant.
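The arithmetic behind "capacity without building a new power plant" is simple. This minimal sketch assumes a fixed site power budget and a hypothetical efficiency figure, measured as useful output (here, tokens) per megawatt-hour. If power stays constant, output scales with efficiency. An efficiency gain is therefore worth a specific amount of extra power at the old efficiency. The function names and figures are illustrative assumptions.

```python
def output_per_hour(power_mw: float, output_per_mwh: float) -> float:
    """Useful output per hour for a site running at full power (arbitrary output units)."""
    return power_mw * output_per_mwh


def equivalent_extra_mw(power_mw: float, old_per_mwh: float, new_per_mwh: float) -> float:
    """Extra power the old design would need to match the new design's output at the same site."""
    return power_mw * (new_per_mwh / old_per_mwh - 1.0)


site_mw = 100.0
baseline = 1.0e6   # hypothetical tokens per MWh on the current architecture
improved = 1.3e6   # hypothetical: 30% more tokens per MWh on a new architecture

print(output_per_hour(site_mw, baseline))
print(output_per_hour(site_mw, improved))
print(equivalent_extra_mw(site_mw, baseline, improved))
```

Take a 100 MW campus. A 30% gain in tokens per MWh is worth roughly 30 MW of new supply at the old efficiency, and that capacity never has to wait in an interconnection queue.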

That advantage compounds. It also attracts capital, because efficiency turns directly into margin and expansion velocity.

So the AI race increasingly resembles an efficiency war fought with architecture, not a sprint fought with peak performance.

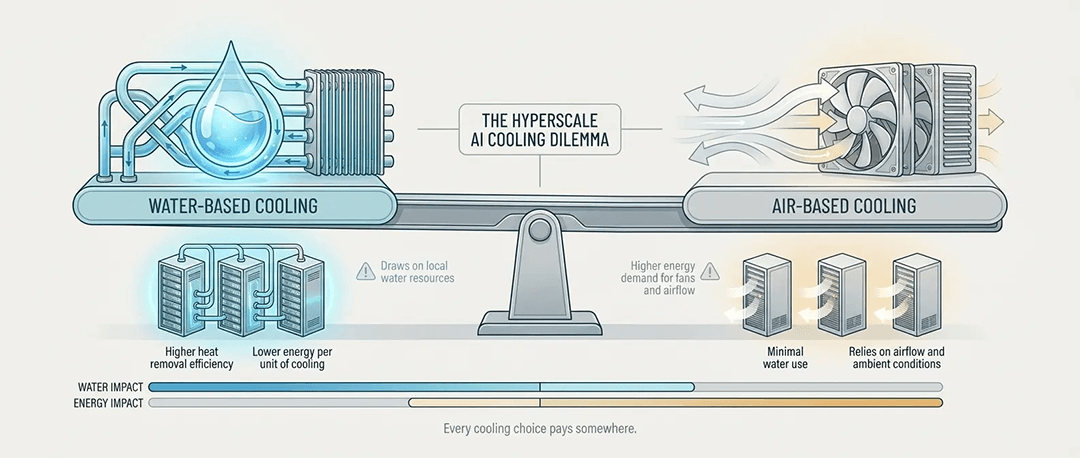

6) Cooling turns into politics

Cooling sounds like a data center detail until it touches real-world scarcity.

Heat removal can consume enormous amounts of water in designs that rely on evaporative cooling. Other approaches reduce water dependence by leaning on outside air and aggressive airflow, especially in colder months. Those choices bring their own tradeoffs: fan energy, noise, and limits on rack density.

Every big AI campus therefore negotiates with its environment:

protect water, accept higher energy draw

save energy, accept higher water impact

increase density, accept tougher cooling demands

reduce density, accept slower scaling

No design gets to escape the trade space. At gigawatt scale, physics collects its invoice.
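Two standard data center metrics can put numbers on this trade space:

- Power usage effectiveness (PUE) is total facility energy divided by IT equipment energy.
- Water usage effectiveness (WUE) is site water use in liters per kWh of IT energy.

This sketch compares two hypothetical cooling designs running the same constant IT load. The design labels and the PUE and WUE values are illustrative placeholders, not measurements of any real facility.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 8760


@dataclass
class CoolingDesign:
    """A hypothetical cooling approach described by two efficiency metrics."""
    name: str
    pue: float            # total facility energy / IT energy (1.0 would mean zero overhead)
    wue_l_per_kwh: float  # liters of site water per kWh of IT energy


def annual_footprint(design: CoolingDesign, it_load_mw: float) -> tuple[float, float]:
    """Return (facility energy in GWh/year, water in million liters/year) for a constant IT load."""
    it_kwh = it_load_mw * 1000 * HOURS_PER_YEAR
    facility_gwh = it_kwh * design.pue / 1e6
    water_ml = it_kwh * design.wue_l_per_kwh / 1e6
    return facility_gwh, water_ml


# Hypothetical designs: one leans on evaporative cooling, one avoids most water use.
water_heavy = CoolingDesign("evaporative", pue=1.15, wue_l_per_kwh=1.8)
water_light = CoolingDesign("air-cooled / closed loop", pue=1.35, wue_l_per_kwh=0.1)

for d in (water_heavy, water_light):
    energy, water = annual_footprint(d, it_load_mw=100)
    print(f"{d.name}: {energy:,.0f} GWh/yr, {water:,.0f} million L/yr")
```

With these placeholder numbers, the water-light design uses about 175 GWh more energy per year and saves roughly 1.5 billion liters of water. That is the "protect water, accept higher energy draw" trade expressed as numbers.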

7) The real winners will secure three things early

When you zoom out, the pattern sharpens.

The future winners in AI will not simply train the smartest models. They will:

1. secure power that stays steady and affordable

2. secure land and grid access that can scale

3. secure an architecture that converts those watts into output efficiently

Then they will align everything into one working system.

That alignment now matters more than slogans about “the cloud” or “AI transformation.” It shapes where investment flows, where jobs appear, and which regions become strategic infrastructure zones.

8) Cornfields to compute fields

A previous revolution taught humans how to feed themselves at scale.

This one pushes humans to feed machines at scale.

That statement is less metaphor than it sounds. It rests on megawatts, cooling loops, packaging lines, and network fabrics. The competitive race now runs through electrical capacity and system design, because those two fuels decide who can build, who can run, and who can afford to keep going.

© 2025 Stephen Bray. Patterns in life and business, simply told.